What's the difference between Ingestion Time and Timestamp in CloudWatch Logs Insights?

Author: Tomasz Łakomy (@tlakomy)

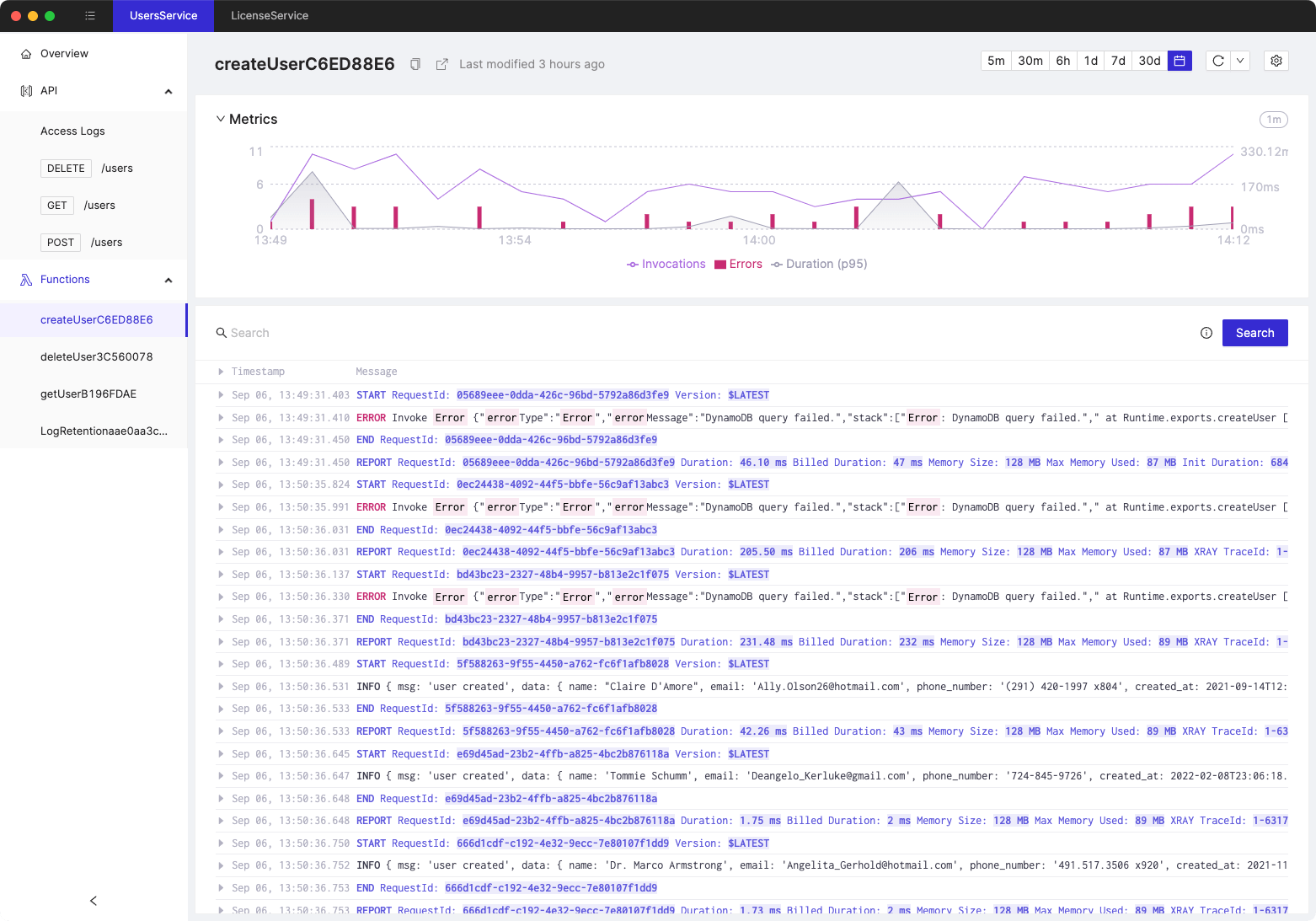

Let's start with the following query to see the difference between Ingestion Time and Timestamp in CloudWatch Logs Insights:

fields @timestamp, @ingestionTime
| sort @timestamp desc
| limit 20

The output will look like this:

As you can see, in this example the @ingestionTime value is exactly the same for all log entries (the actual log messages are not relevant here), but the @timestamp value differs for each log entry.

Why is that?

The @timestamp is the time the event was said to have occurred, according to the message that was sent to CloudWatch.

The @ingestionTime is the time CloudWatch actually received the message about the event.

Bear in mind that there's always a delay between the time an event occurs and the time CloudWatch receives and "ingests" the message about that event.

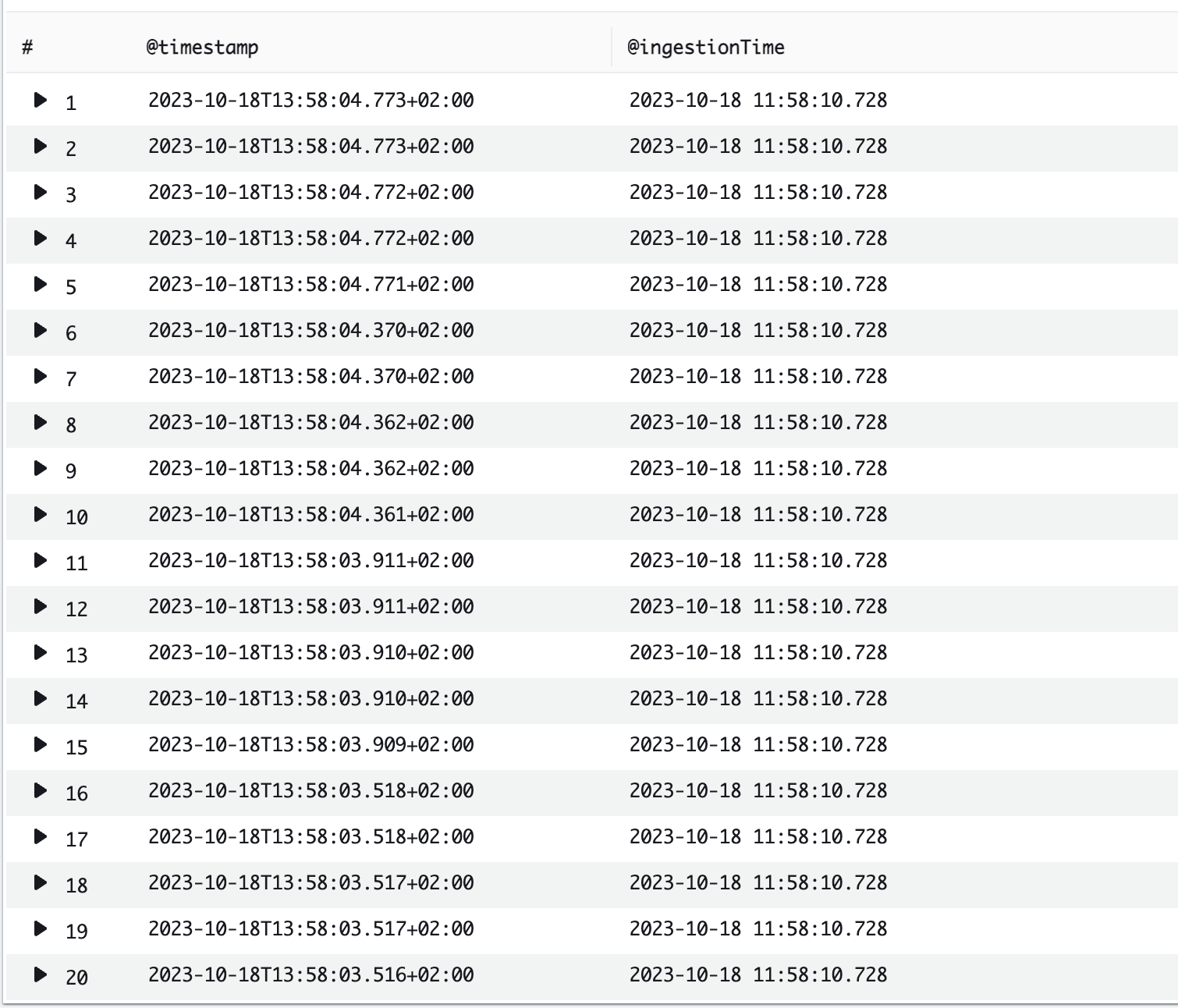

You may notice one more thing in the screenshot above: the @timestamp is in your local timezone (I happen to live in GMT+2), while the @ingestionTime is in UTC. Bear in mind that this is just the AWS Console UI helping us a little. Both of those values are stored as the number of milliseconds after Jan 1, 1970 00:00:00 UTC, for instance:

@ingestionTime 1697630421207

@timestamp 1697630414311
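To make those two numbers a bit more tangible, here is a minimal Python sketch (not part of CloudWatch itself) that converts them to readable dates and computes the ingestion delay. The `epoch_ms_to_datetime` helper is a hypothetical name made up for this example, and the fixed UTC+2 offset is an assumption standing in for my local timezone.

```python
from datetime import datetime, timedelta, timezone

# Sample values quoted above, in milliseconds since the Unix epoch.
INGESTION_TIME_MS = 1697630421207
TIMESTAMP_MS = 1697630414311

# Assumption: a fixed UTC+2 offset stands in for the local timezone.
LOCAL_TZ = timezone(timedelta(hours=2))


def epoch_ms_to_datetime(epoch_ms: int, tz: timezone = timezone.utc) -> datetime:
    """Hypothetical helper: convert epoch milliseconds to an aware datetime."""
    # Split into whole seconds and leftover milliseconds to avoid float rounding.
    seconds, millis = divmod(epoch_ms, 1000)
    return datetime.fromtimestamp(seconds, tz=tz) + timedelta(milliseconds=millis)


timestamp_utc = epoch_ms_to_datetime(TIMESTAMP_MS)
timestamp_local = epoch_ms_to_datetime(TIMESTAMP_MS, LOCAL_TZ)
ingestion_utc = epoch_ms_to_datetime(INGESTION_TIME_MS)

# Same instant, two renderings: only the displayed offset differs.
print(timestamp_utc.isoformat(timespec="milliseconds"))    # 2023-10-18T12:00:14.311+00:00
print(timestamp_local.isoformat(timespec="milliseconds"))  # 2023-10-18T14:00:14.311+02:00
print(ingestion_utc.isoformat(timespec="milliseconds"))    # 2023-10-18T12:00:21.207+00:00

# Delay between the event occurring and CloudWatch ingesting it.
delay_ms = INGESTION_TIME_MS - TIMESTAMP_MS
print(f"ingestion delay: {delay_ms} ms")                   # ingestion delay: 6896 ms
```

For this particular pair of values the event was ingested just under seven seconds after it occurred, and the UTC and UTC+2 renderings of @timestamp describe exactly the same instant.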

FWIW I'd prefer if both of those values were displayed in UTC by default. If you prefer a local timezone though, there's a trick that can help you: the fromMillis function.

Let's update our query:

fields @timestamp, fromMillis(@ingestionTime)
| sort @timestamp desc
| limit 20

The output will look like this:

As you can see, the @ingestionTime is now displayed in my local timezone. This can be helpful if you'd like to avoid doing timezone math in your head (or if you'd like the displayed values to be more consistent).

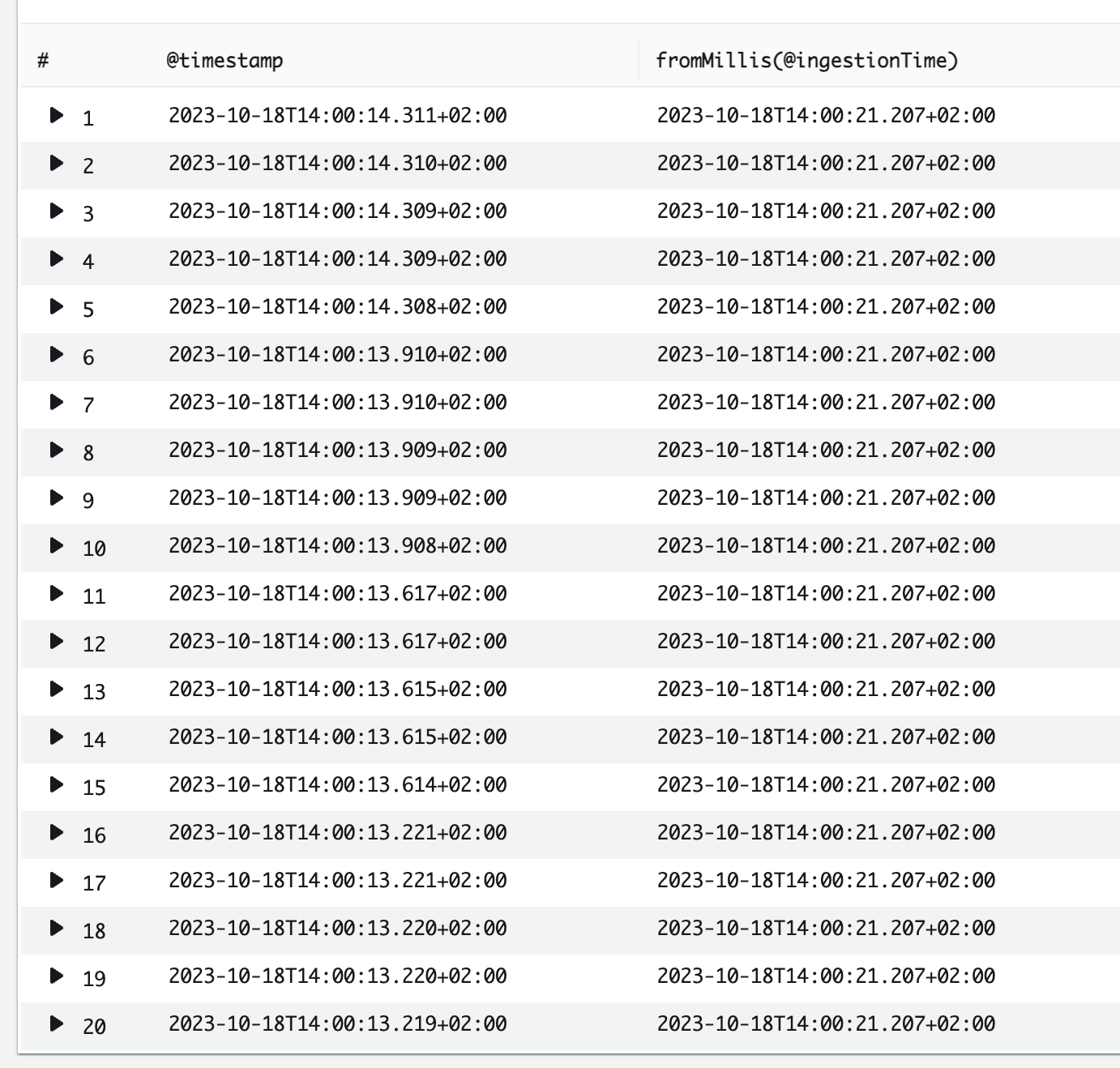
